Prototype #3: User-Defined Gestures with Machine Learning

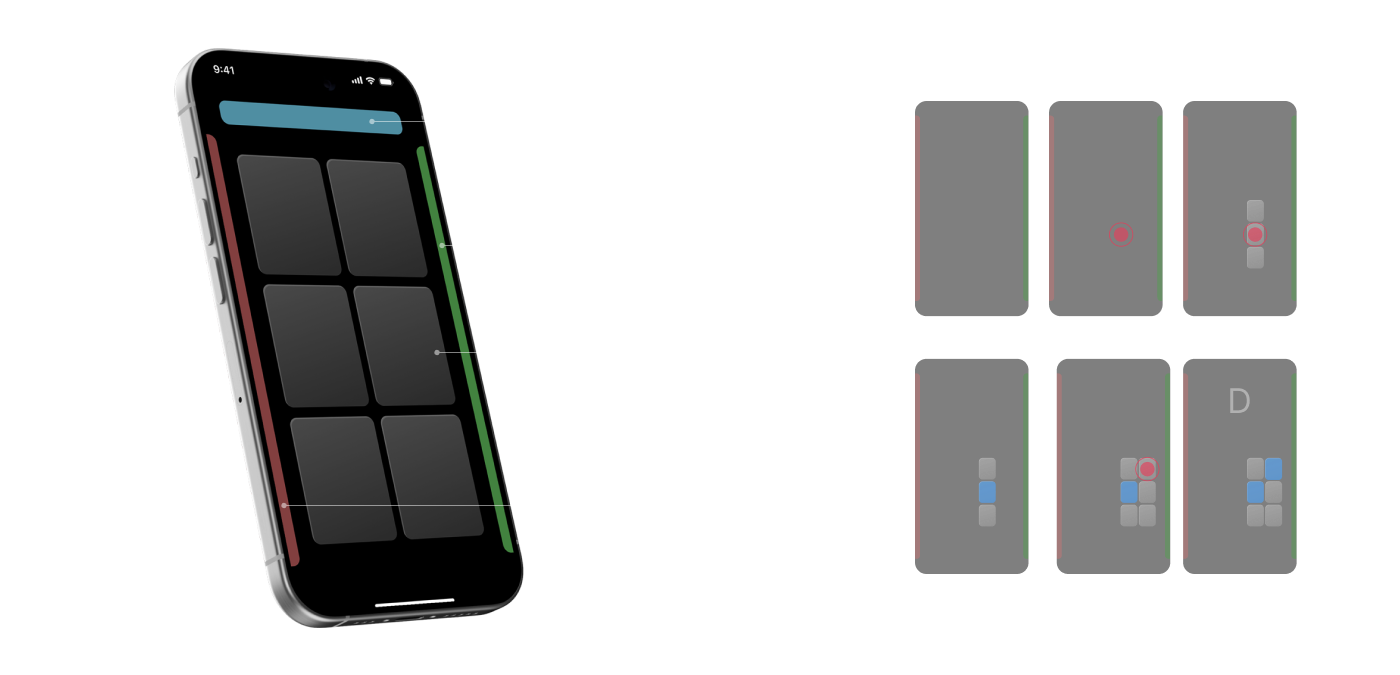

To address the feedback, I collaborated with a software engineer to integrate a machine learning system into my prototype.

This new prototype could map user-defined gestures to specific commands and actions, creating a personalized experience for each user and allowing the system to learn each user's unique gestures over time.

UX research

I partnered with a UX researcher to conduct a series of usability tests and interviews at a school for the blind near Berkeley, CA.

We interviewed dozens of users and received a great deal of praise for the interface we created. Many students were excited about its potential use in specific apps and the level of control it could give them.

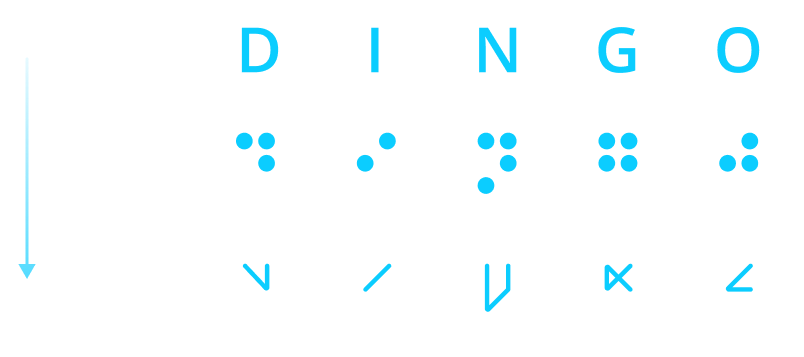

During our research, we also uncovered a generational shift in technology use: younger blind users were increasingly less fluent in braille and relied more and more heavily on voice commands.

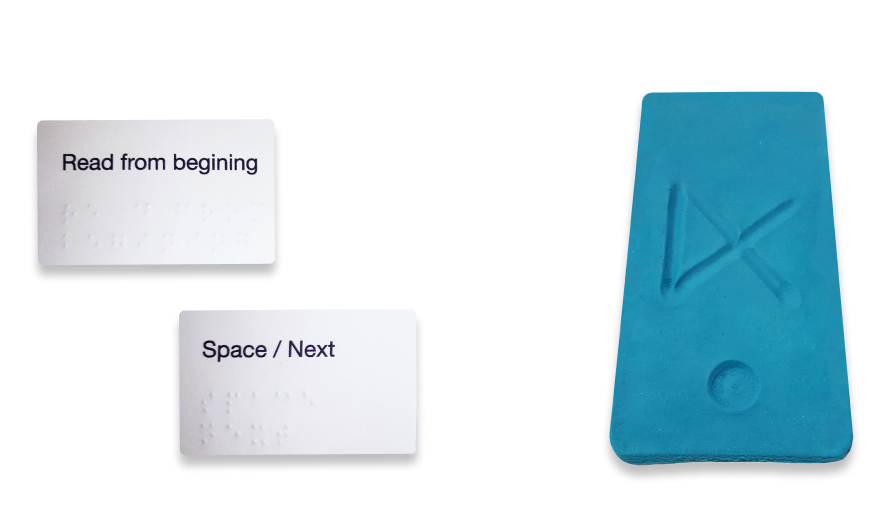

An unexpected finding was the concern many blind users had about safety and theft when using their expensive phones in public. Several participants mentioned feeling uneasy, worrying that someone might snatch their phone from their hands or even from a table. To address this, my next prototype will be a discreet physical device that lets users control their phones without taking them out of their bags. This solution will be OS-agnostic, designed for ease of use, and will address pain points similar to those targeted by the gesture system.